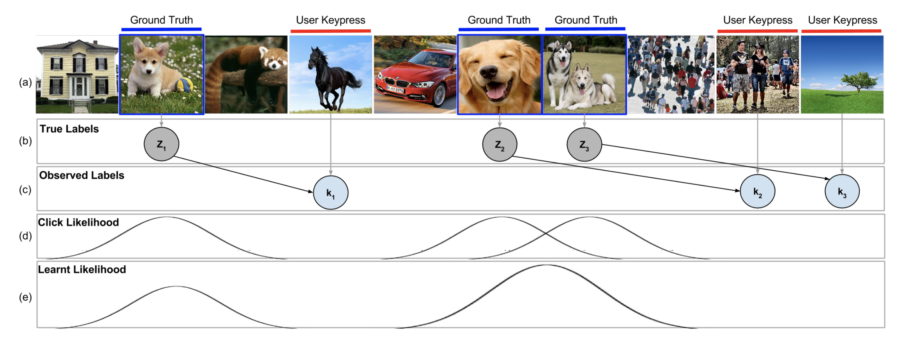

(a) Images are shown to workers at 100ms per image. Workers react whenever they see a dog. (b) The true labels are the ground truth dog images. (c) The workers’ keypresses are slow and occur several images after the dog images have already passed. We record these keypresses as the observed labels. (d) Our technique models each keypress as a delayed Gaussian to predict (e) the probability of an image containing a dog from these observed labels.

Authors

Ranjay Krishna, Kenji Hata, Stephanie Chen, Joshua Kravitz, David Ayman Shamma, Li Fei-Fei, Michael Bernstein

Abstract

Microtask crowdsourcing has enabled dataset advances in social science and machine learning, but existing crowdsourcing schemes are too expensive to scale up with the expanding volume of data. To scale and widen the applicability

of crowdsourcing, we present a technique that produces extremely rapid judgments for binary and categorical labels. Rather than punishing all errors, which causes workers to proceed slowly and deliberately, our technique speeds up workers’ judgments to the point where errors are acceptable and even expected. We demonstrate that it is possible to rectify these errors by randomizing task order and modeling response latency. We evaluate our technique on a breadth of common labeling tasks such as image verification, word similarity, sentiment analysis and topic classification. Where prior work typically achieves a 0.25× to 1× speedup over fixed majority vote, our approach often achieves an order of magnitude (10×) speedup.

The research was published in ACM Conference on Human Factors in Computing Systems on 4/20/2016. The research is supported by the Brown Institute Magic Grant for the project Visual Genome.

Access the paper: http://arxiv.org/pdf/1602.04506v1.pdf

To contact the authors, please send a message to ranjaykrishna@cs.stanford.edu