DataTalk is an experimental system that makes it easier to follow the money flowing through national campaigns for the Nov. 5 U.S. election. It allows for natural-language queries of large

News

Conference and Hackathon on Journalism, AI and the Election

[Register here] We are pleased to announce that the Brown Institute at Columbia Journalism School is partnering with Media Party to host a unique gathering of journalists, designers, entrepreneurs and

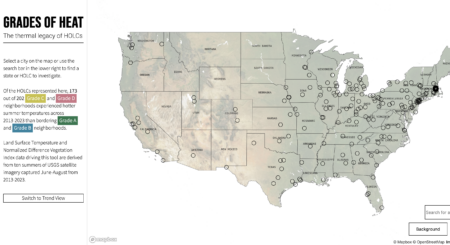

Announcing the Launch of Climate Central’s Report: “Urban Heat Hot Spots in 65 Cities”

We are excited to announce a significant contribution to Climate Central’s groundbreaking report, Urban Heat Hot Spots in 65 Cities. This comprehensive analysis sheds light on how urban heat islands

The Brown Institute Announces Its 2024-2025 Magic Grant Recipients

As a collaboration between Columbia Journalism School and Stanford University’s School of Engineering, the Brown Institute for Media Innovation awards its “Magic Grants” to projects that work between the two

Brown Institute Awarded Collaboratory Grant

The Brown Institute, in partnership with the Vagelos Computational Science Center, is excited to announce a significant new development at Barnard College—a pioneering course entitled “Writing with/on Computing,” slated to

Our AI Hackathon Reviewed in CJR!

Our AI Hackathon was featured in the Columbia Journalism Review! Yona TR Golding spent time with the participants and offers a great picture of the event. She writes: This past

Updates from Our Open Source AI Hackathon

Last month, the Brown Institute hosted more than 100 journalists, computer and data scientists, and skilled product thinkers for a hackathon to explore the applications of AI for journalism and

The Winners of our Venture Challenge!

For the third consecutive year, the Brown Institute partnered with Columbia Entrepreneurship to offer a segment of the StartupColumbia Venture Challenge dedicated to media initiatives. The first round of our competition

(+1) Sterling Proffer joins our Entrepreneurs in Residence

The Brown Institute has recruited an impressive list of Entrepreneurs in Residence including Diane Chang, Ethar El-Katatney, Jennifer 8. Lee and Shazna Nessa. To the ranks we are pleased to