Major advances in computation have made mapping local news easier. What can we learn from analyzing where stories fall on a map?

In fall 2019, the Brown Institute and The Lenfest Institute for Journalism’s Lenfest Local Lab came together to try to answer shared questions about the relationship between geography, quality and experience in local news. Combining our resources lets us draw on both organizations’ strengths in data, media, and innovation. The Brown Institute often focuses on databases and predictions, with projects ranging from telling stories through large sets of public and/or private data (Canners, Democracy Fighters) to computational tools that synthesize information from large collections of documents (Declassification Engine, Datashare).

Meanwhile, the Lenfest Lab, an innovation program of the Lenfest Institute, launched a series of experimental products with The Philadelphia Inquirer last year that explore the possibilities of putting a reader’s location at the center of their local news experience. Lab projects included an app that displays local restaurant reviews based on proximity to the reader and an architecture news app that alerts users to stories written about places they walk by.

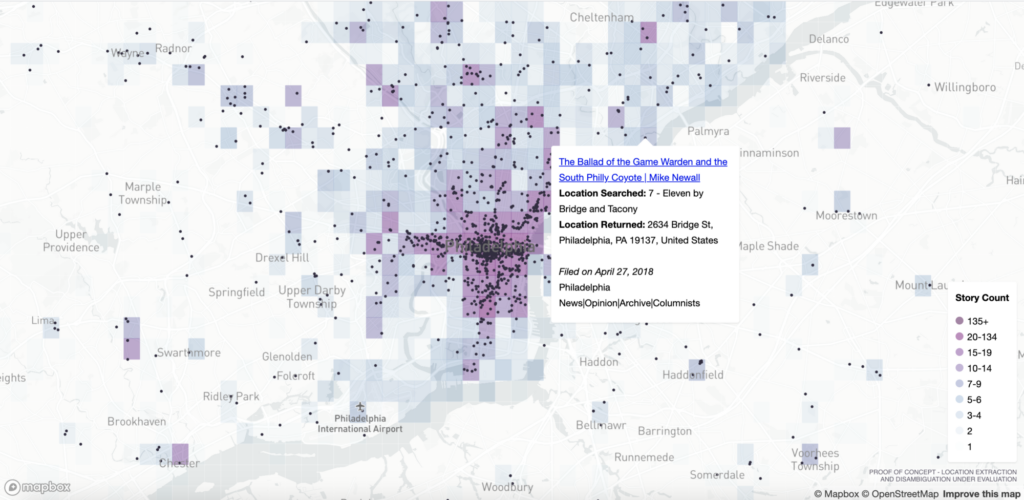

We conducted an initial review of research in the area of mapping and local news, and we agreed that developing an automated approach to identifying and mapping locations found in news article text would be a valuable exercise for our ongoing research and product development experimentation. For the Lenfest Lab, a tool like this could help the team identify opportunities for experiments beyond single-topic products for audiences within a small geographic area. For the Brown Institute, a mapping tool builds on the expertise of research staff at the institute and furthers efforts currently underway to develop open-source products for local news.

The project’s inspiration: A study of Toronto Star coverage

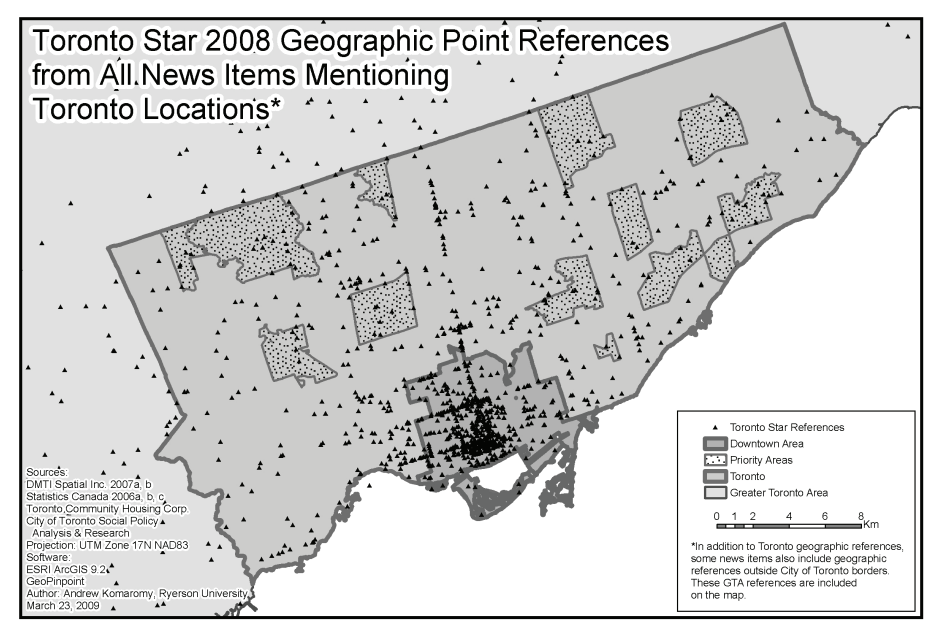

In 2009, April Lindgren of Ryerson University published a paper in the Canadian Journal of Urban Research titled ‘News, Geography and Disadvantage: Mapping Newspaper Coverage of High-needs Neighbourhoods in Toronto, Canada’. The research examined Toronto Star news coverage, measuring the quantity and subject matter of coverage by geography. Specifically, the study compared reporting across geographies, looking at how the Toronto Star covered areas that the Strong Neighbourhoods Task Force, a government-run coalition, had defined as “troubled areas,” or pockets of social need and poverty.

The study was conducted by a team of researchers who scanned 28 print editions of the Toronto Star from an eight-month period in 2008, highlighting the locations found in each article. The locations were then placed on a map and analyzed. In total, the team analyzed 2,731 stories containing 5,697 locations. The paper’s conclusions hint at the impact a tool of this kind could have if it could scale and be adopted by newsrooms small and large, wherever they are located. One finding was that a disproportionately high share of the Toronto Star’s reporting about the “troubled areas” was crime-related. Findings like this open up opportunities to analyze and understand how news coverage shapes public opinion and represents communities. The simple act of placing news on a map allows new types of questions to be asked and new business opportunities to be explored.

Because both the location extraction and the location mapping were done by hand, the study’s conclusions were limited, narrow, and static. Since the study was released, major advances have taken place in computation, specifically in natural language processing, machine learning, and geographic information systems (GIS). These advances make much of this work automatable and scalable, and they open new opportunities for products and research in the computational journalism ecosystem.

What’s Next

On December 15, 2020, we received new support from the Google News Initiative (GNI) Innovation Challenge to expand our partnership with The Lenfest Institute and The Philadelphia Inquirer by creating open-source content audit and analysis tools for local newsrooms. The tools aim to make content audits faster and less expensive, which could speed the advancement of diversity, equity and inclusion (DEI) in local coverage. Our cross-disciplinary partnership between a lab, a local newsroom and a university will draw on each organization’s strengths in product and UX innovation, in journalism, and in data and computation to address shared questions about the relationship between equity, quality, geography and service in local news.

Move beyond location analysis

With this new support from the Google GNI Innovation Challenge, we will begin by fine-tuning our location analysis tool and exploring how to support its use in newsrooms of all sizes and localities, from bigger cities and regions to smaller towns. We will then look at many more facets of content auditing, including the diversity of sources, image analysis, and which images and stories are published depending on the topic, author, or location of a story.

With this data-informed approach, newsrooms that use these auditing tools can begin to understand how communities are reflected in their coverage by examining how editorial decisions manifest themselves in the language, locations, sources and visuals of their stories. The tools will help newsrooms assess fairness by uncovering gaps in coverage, whether of a town or neighborhood or of a specific community defined by gender, race, ethnicity or socio-economic background. They will highlight topical and other coverage disparities measured against population, income, geographic distribution and other demographic benchmarks that we will develop in collaboration with newsrooms and with researchers who have expertise in equity and representation. All of these insights should point to opportunities for both the newsroom and the business to act on.

Updates on our project can be found on the main Brown Institute news feed.

Our Goal: Support the transition from one-off audits to continuous accountability.

The primary outcome we hope to achieve is a transition from one-off equity audits to automated computational processes that help newsrooms shape inclusive coverage and build products that engage readers. A secondary outcome we hope for is a reimagining of news products that builds on this push for more inclusive and representative coverage. We imagine new products launched in direct response to the insights and data the tools provide, as well as opportunities to help reporters identify new and better story ideas, reader-facing products, and business opportunities.

The Team

The Lenfest Lab

- Sarah Schmalbach, Product Director

- Annie Mendez, Special Projects Editor

- Brent Hargrave, Engineer

- Ajay Chainani, Engineer

- Faye Teng, UX Research & Design

The Brown Institute

- Michael Krisch, Deputy Director

- Faculty Advisors from Social Work, Data Science, and Computer Science

- Cohort of Five Data Science, Computer Science, and Journalism Fellows

The Philadelphia Inquirer

- Patrick Kerkstra, Managing Editor

- Matt Boggie, Chief Product Officer

- Various members of the newsroom Content Audit committees